Managing the risks of agentic AI

There are numerous risks involved in the use of AI that don’t always appear on the surface

Add bookmark

In 2022, Jake Moffatt bought an urgent flight with Air Canada to rush home for his grandmother’s funeral, assured by the customer service artificial intelligence (AI) he’d been chatting to that he was eligible for a discount by way of refund once he’d dealt with the family commitments.

Except the AI was wrong. When Moffatt applied for the refund, Air Canada refused. In 2024, a tribunal declared that the chat bot’s statements were “negligent misrepresentation” and awarded Moffatt both the refund and compensation.

Many industries are rushing to deploy agentic AI but as the Moffatt case shows, it’s not a flawless technology, nor one without significant risks. While we explore the new horizons of AI, it’s important to consider what your organization’s risk appetite is for these tools and how your processes can help mitigate them.

Counting the cost

AI technologies are becoming more affordable, but the costs of agentic AI go well beyond the initial investment. AI tools require significant server capacity and that has an ongoing cost. There’s also the need for training the tool out-of-the-box and potentially retraining or modifying its behavior as it evolves through use. Those exercises need to be accounted for in the initiative to have a fair gauge of the tools return on investment (ROI).

The rollout processes for agentic AI need to have checkpoints in place that monitor these costs. Building in dashboards and reporting that captures the real investment of time and resources will help manage any potential budget blowouts and ensure that the technology is performing economically. Systematic cost monitoring will help keep the initiative on track and provide a reference point for future rollouts.

Checking the locks

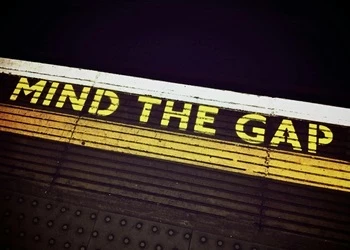

AI agents operate off a complex set of instructions and information, but they’re not foolproof. A New York-based job seeker put a fragment of code in his LinkedIn profile, instructing any AI bots contacting him to provide a flan recipe in their message – and they did. While an AI agent is intended to provide certain information in an efficient and accessible way, there is a risk that it could divulge more than intended, or worse, users could inject code into the AI to instruct the agent to behave in a new manner.

This potential requires careful cybersecurity processes built into the AI workflow. Technical specialists should check the instructions the agents work from and perform security tests to close any potential attack vectors. There should be regular checks on the information being given out by the AI and clear escalation procedures in place for the agents to forward suspicious or complex cases to human operators.

Confirming the facts

As the Air Canada example shows, sometimes AI will be confidently incorrect. The phenomenon of ‘AI hallucinations’ is very real and can expose an organization to both reputational damage and legal repercussions.

The European Union’s AI Act has been rolling out since mid-2024 and holds businesses accountable for the activity and use of AI in numerous capacities, which exposes those organizations to significant fines. While the point of agentic AI is to efficiently and effortlessly provide information and guide customers, the content that it uses needs to be carefully monitored.

The key here is in building validation steps into automated processes and AI workflows. Audit trails allow you to trace the flow of information and pinpoint where any potential issues may occur. Where agentic AI is responsible for providing advice or instruction, there should be ‘human-in-the-loop’ processes that ensure correct information is delivered or decisions are signed off before being presented to customers.

Again, the instructions and data that the AI is drawing from should be audited regularly to ensure that it understands what is actually true for your business, rather than imagining its own solutions.

There are numerous other risks involved in the use of AI that don’t appear on the surface. AI tools are notorious for their high-power demands. How does that fit with your organization’s sustainability commitments? People are still getting used to dealing with AI on a regular basis, so consider how your customers will respond and how the use of agentic AI reflects on your brand and culture.

None of these are insurmountable, but they require an understanding of the potential risks and a willingness to embrace them through clear and effective processes. AI isn’t going away anytime soon, but before we jump on the bandwagon, we should all consider just how ready our business is for it.

If you’d like to take a deeper look at the dimensions that shape true AI-readiness and the processes that help leaders navigate these risks, I invite you to explore our full Global Process Excellence and AI-Readiness Report 2025.